Last week, we talked a little bit about how we wrap our Unity app in a native iOS/Android app wrapper. For those of you familiar with Unity, you may be thinking “Wait a second! By default, when you build for iOS/Android, Unity spits out a native iOS/Android project. What’s so special about that?” Well, we do things a little bit differently.

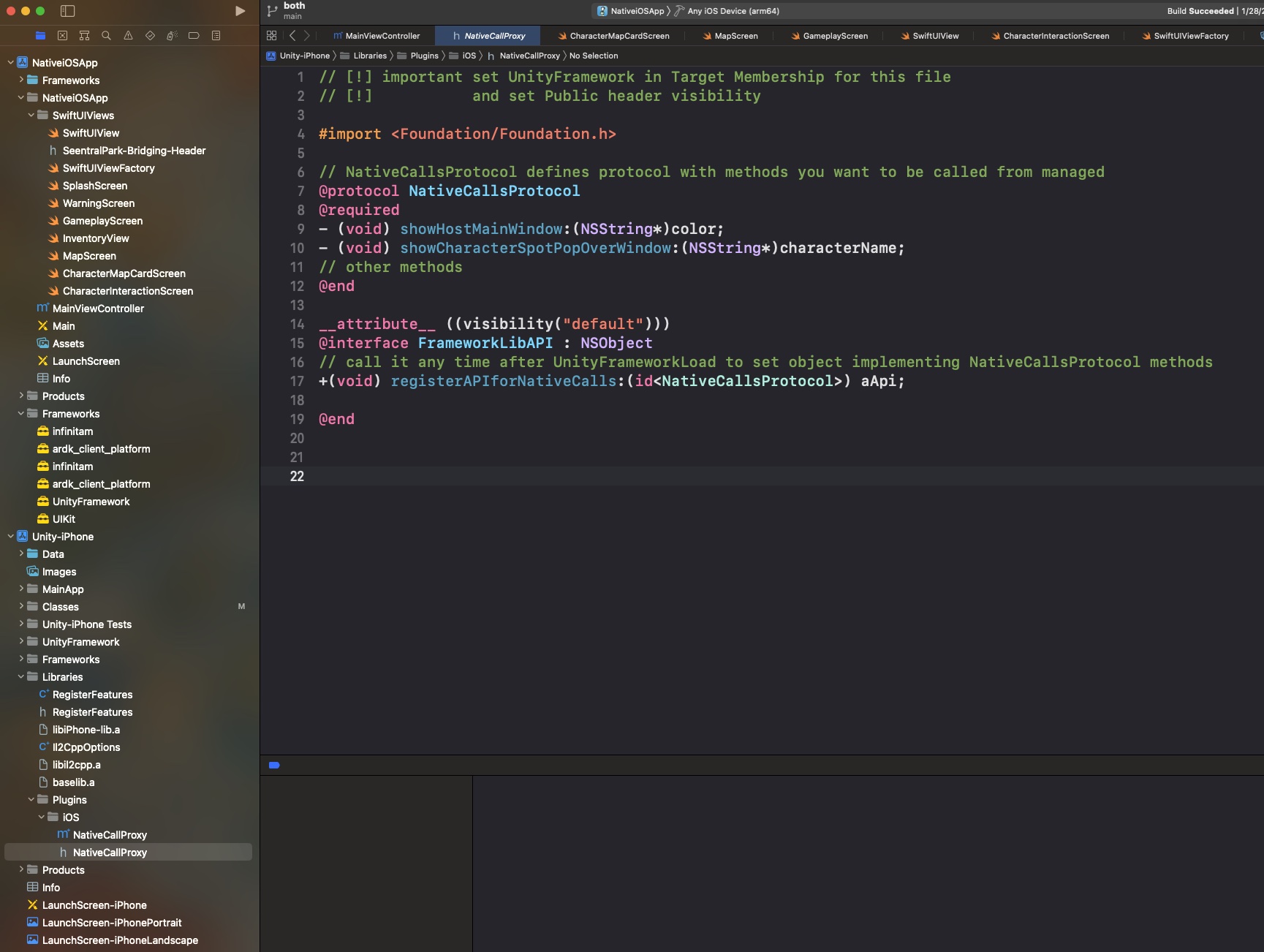

To get a better understanding, watch this amazing talk by Ed Arenberg at the final 360|iDev conference. Basically, we’re abstracting the Unity game executable into a library. We do this by taking the Xcode project that Unity spits out, adding it to a new Xcode workspace that has its own root application, doing some configuring in both projects, and then using a bridge to communicate between the two. That’s as nitty-gritty as we’re going to get here; check out Ed’s video for more step-by-step detail.
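
To make the "bridge" idea a bit more concrete, here is a minimal sketch of the shape such a bridge can take. It is written in plain Java so it runs anywhere, and it is not our actual code: `GameBridge`, `tellGame`, `onGameEvent`, `fromGame` and the `command:payload` string format are all made-up names for illustration, and the real call into the Unity library is replaced by a simple callback.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical in-process bridge between a native host app and an embedded game library.
// None of these names come from Unity; the real transport is stubbed as a plain callback.
final class GameBridge {
    // Handlers the native side registers for events raised by the game.
    private final Map<String, Consumer<String>> nativeHandlers = new HashMap<>();
    // Stand-in for whatever call actually delivers a message into the game.
    private final Consumer<String> sendToGame;

    GameBridge(Consumer<String> sendToGame) {
        this.sendToGame = sendToGame;
    }

    // Native -> game: encode a command and its payload as one string (assumed format).
    void tellGame(String command, String payload) {
        sendToGame.accept(command + ":" + payload);
    }

    // The native side subscribes to a named game event.
    void onGameEvent(String event, Consumer<String> handler) {
        nativeHandlers.put(event, handler);
    }

    // Game -> native: called from the game side; returns false if nobody is listening.
    boolean fromGame(String event, String payload) {
        Consumer<String> handler = nativeHandlers.get(event);
        if (handler == null) {
            return false;
        }
        handler.accept(payload);
        return true;
    }

    public static void main(String[] args) {
        List<String> sentToGame = new ArrayList<>();
        GameBridge bridge = new GameBridge(sentToGame::add);
        // The native HUD listens for score updates coming out of the game.
        bridge.onGameEvent("scoreChanged", score -> System.out.println("HUD score label: " + score));
        // A native pause button tells the game to pause.
        bridge.tellGame("pause", "true");
        // The game reports a new score back to the native layer.
        bridge.fromGame("scoreChanged", "1200");
        System.out.println("Messages sent to game: " + sentToGame);
    }
}
```

The point of keeping everything as named string messages is that neither side needs to know the other's types, which is roughly what lets the game stay a black-box library.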

Now, I should clarify, this is not something Ed or I came up with. This is all based upon a sample Unity-as-a-Library project provided by Unity. A key fact is that many Unity devs have little to no native app experience, because they’re primarily game makers. They don’t know the difference between Objective-C/Swift on the iOS side or Java/Kotlin on the Android side. They want Unity to handle as much of the application configuration and setup as possible. This works since most Unity games are standalone, complete experiences. However, some Unity games are just a small part of a greater app experience; think of a cereal marketing app that has an embedded game added to it. This sample project was made more for those latter types of apps.

Now, our app is 100% a standalone Unity experience, so why go down the Unity library path? There are three strong reasons: previous native app experience, the eventuality of HMDs, and wanting to look good.

We KNOW native app dev

For the past ten years, Omega Ortega has focused on native app development. We know native UI elements very well. We know their strengths, we know how to implement things very quickly and we know how to keep the UX/UI familiar to users of their preferred mobile platforms. Designing UI elements (buttons, labels, popups, overlays, etc.) in Unity is not fun, not intuitive and not easy. Yes, I get that on that last point, it’s probably easier for game devs than trying to do it natively. To me, that’s like thinking vanilla ice cream is the best despite never having tried any other flavor, but hey, some peeps do just like vanilla. LOL Suffice it to say, we just feel more comfortable and productive making the HUD elements natively. Plus, this allows us to customize the UI based on the mobile platform. I know, for simplicity’s sake, many game devs believe that they don’t have to make their apps different on iPhone vs Android. It’s hard enough to build one UI, much less two versions of the same UI. However, the more friction you can eliminate for a user, the better. Making your UI elements feel like all the other native apps on their phone is an absolute win for you and them.

“The HMDs are coming!”

Head Mounted Displays (HMDs) are here, with Meta Quest being the most famous of them all (while primarily VR, the passthrough does allow some AR experiences). HoloLens and Magic Leap (where many of my Niantic friends came from) are now on their second iteration, with HoloLens likely being dead now and Magic Leap now focusing on the enterprise market. CES had a smattering of AR HMD announcements, not to mention that last year Niantic even announced a consumer AR HMD, in partnership with Qualcomm and their Snapdragon platform.

If you think you can get away with annoying your users with one design across the mobile phone platforms, and thus can do the same for HMDs, you’re wrong. Flat out wrong. What comes across as annoying in the back of your users’ minds when things don’t look or feel 100% correct on their phone will induce claustrophobia and vomiting on an HMD. You think I jest, but I’m being completely honest. You see, when you completely cover the screen with a popup selection on a mobile device, no biggy. If the user doesn’t like it, they can just look away. If you cover their entire field of vision on an HMD, suddenly they’re instinctively pulling their head back to get away and they can’t.

Worse still, say your popups don’t cover the entire field of vision, just about 1/3 to 1/2. The users can turn their heads and the popup will follow, but they can still look around it, so that should be fine. WRONG! One thing that I learned from working on an experience for Magic Leap is that when people move their head, they expect things to stay in the real-world spot they were at. You can have the popup follow the head movement on a delay, so the user’s mind can catch up. If you don’t delay long enough, move things too fast, or don’t move things at all, it makes people want to vomit. That’s something you should never induce in your users.
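
The "follow on a delay" behavior can be sketched roughly like this. This is only an illustration of the idea, not what we shipped on Magic Leap: the `LazyFollow` class, the dead zone, the delay and the speed cap are all assumptions, the numbers in `main` are arbitrary, and nothing here has been tuned for comfort. It also only tracks yaw and ignores the wraparound at 360 degrees.

```java
// Hypothetical "lazy follow": the popup holds its spot, waits, then eases toward the head.
final class LazyFollow {
    private final double deadZoneDeg;        // how far the head may turn before the popup reacts
    private final double delaySec;           // how long the head must stay turned before it moves
    private final double maxSpeedDegPerSec;  // cap on how fast the popup may catch up
    private double popupYawDeg;              // current popup direction
    private double timeOutsideSec = 0;       // time spent outside the dead zone
    private boolean following = false;       // true while the popup is catching up

    LazyFollow(double deadZoneDeg, double delaySec, double maxSpeedDegPerSec, double startYawDeg) {
        this.deadZoneDeg = deadZoneDeg;
        this.delaySec = delaySec;
        this.maxSpeedDegPerSec = maxSpeedDegPerSec;
        this.popupYawDeg = startYawDeg;
    }

    // Call once per frame with the current head yaw; returns the popup yaw to render at.
    double update(double headYawDeg, double dtSec) {
        double error = headYawDeg - popupYawDeg;
        if (!following) {
            if (Math.abs(error) <= deadZoneDeg) {
                timeOutsideSec = 0;          // small head motion: popup stays world-locked
                return popupYawDeg;
            }
            timeOutsideSec += dtSec;
            if (timeOutsideSec < delaySec) {
                return popupYawDeg;          // still inside the delay window: do not move yet
            }
            following = true;
        }
        double maxStep = maxSpeedDegPerSec * dtSec;
        if (Math.abs(error) <= maxStep) {
            popupYawDeg = headYawDeg;        // caught up: lock to the world again
            following = false;
            timeOutsideSec = 0;
        } else {
            popupYawDeg += Math.signum(error) * maxStep;  // move at the capped speed
        }
        return popupYawDeg;
    }

    public static void main(String[] args) {
        LazyFollow popup = new LazyFollow(10.0, 0.5, 60.0, 0.0);
        double[] headYaw = {5, 30, 30, 30, 30};
        for (double yaw : headYaw) {
            System.out.println("head=" + yaw + " popup=" + popup.update(yaw, 0.25));
        }
    }
}
```

The three knobs map to the three failure modes above: the delay covers "don’t delay long enough", the speed cap covers "move things too fast", and the fact that it eventually follows covers "don’t move things at all".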

By keeping the HUD and UI elements in a native layer, we should be able to swap out mobile device paradigms for HMD paradigms without having to modify the core game itself. That’ll be a big win and allow us to pick and choose which device types we want to support and do it well.
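
Here is a tiny sketch of what that separation could look like. It is a simplification under my own assumptions, not our production architecture: `Hud`, `PhoneHud`, `HeadsetHud` and `GameCore` are hypothetical names, and the HUDs return a description string instead of drawing anything so the example stays runnable.

```java
// Hypothetical sketch: the game core talks to a HUD interface, never to a concrete UI toolkit.
final class HudSwap {
    // What the core game is allowed to ask of the UI layer.
    interface Hud {
        String showPopup(String message);
    }

    // Phone paradigm: a modal that takes over the screen is acceptable.
    static final class PhoneHud implements Hud {
        public String showPopup(String message) {
            return "full-screen modal: " + message;
        }
    }

    // Headset paradigm: a small panel placed in the world, never covering the field of view.
    static final class HeadsetHud implements Hud {
        public String showPopup(String message) {
            return "world-anchored panel: " + message;
        }
    }

    // Stand-in for the game; it only knows about the Hud interface.
    static final class GameCore {
        private final Hud hud;

        GameCore(Hud hud) {
            this.hud = hud;
        }

        String levelComplete(int level) {
            return hud.showPopup("Level " + level + " complete");
        }
    }

    public static void main(String[] args) {
        // Same game code, two different presentation layers.
        System.out.println(new GameCore(new PhoneHud()).levelComplete(3));
        System.out.println(new GameCore(new HeadsetHud()).levelComplete(3));
    }
}
```

Swapping device types then comes down to handing the game a different `Hud`, with the game code itself left untouched.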

Cleaner Looking

One last thing I really like about this approach is how clean the UI looks. One thing I notice in games is how horrible some UI/HUD elements look because they’re not easy to make in Unity. Our UI elements are buttery smooth and full of life, sliding and resizing everywhere. Granted, a lot of that is due to the great design by Anthony Tanaka, the lead designer, but native tools just make it so easy to implement. Think about it: Apple and Google spend millions of dollars to make those elements look good. Why would you not want to leverage all that? To see what I mean, take a look at the brief video below.

That wraps up this (delayed) weekly addition to our Tech Note series. I hope to be a little more on time for the promised Friday release schedule, but it may take a few posts before I find my groove. I will get there though, I promise! LOL